MAB vs. MVT: Choosing the right experimentation method for smarter marketing

Published on October 28, 2025/Last edited on October 28, 2025/13 min read

Team Braze

Marketers are under pressure to make decisions quickly, but testing can feel like it slows everything down. Wait too long for results, and the opportunity may be gone; move too fast, and you risk making calls without enough insight. That tension is why many teams struggle to balance speed with precision.

Multi-armed bandit testing (MAB) and multivariate testing (MVT) offer different ways to solve the problem. One adapts in real time, directing more traffic to the best-performing option. The other digs deeper, showing which elements drive results across an experience. Knowing how and when to use each method can turn experimentation from a bottleneck into a driver of growth.

In this article, we’ll break down how MAB and MVT work, where they differ, and how brands are applying them across real campaigns.

Contents

What are multi-armed bandit testing and multivariate testing?

Key differences between MAB and MVT

The marketer’s experimentation loop: Online experimentation methods

Real-life marketing use cases with measurable outcomes

How Braze enables smarter experimentation

Final thoughts on MAB vs MVT testing

What are multi-armed bandit testing and multivariate testing?

Marketers know testing is important, but the methods can feel confusing. Two that often get compared are multi-armed bandit testing (MAB) and multivariate testing (MVT). Both help optimize campaigns, but they work in very different ways.

Multi-armed bandit testing (MAB)

Multi-armed bandit testing is like running an A/B test that learns as it goes. Instead of splitting traffic evenly between variations, this form of adaptive testing watches performance in real time and sends more people to the versions that are working better. This is called dynamic traffic allocation—poor-performing options quickly get less exposure, while stronger ones reach more of the audience.

MAB is built on the balance of exploration (trying different options) and exploitation (leaning into the winners). The trade-off is that you get fewer insights about why each option worked, but you maximize conversions while the test is running. This makes multi-armed bandit marketing particularly effective for campaigns where real-time optimization matters more than post-test analysis.
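As a rough illustration of the exploration and exploitation balance described above, here is a minimal sketch of an epsilon-greedy bandit, one of the simplest allocation strategies. It is not how any particular platform allocates traffic; the function name `run_epsilon_greedy`, the conversion rates, and the 10% exploration share are all hypothetical values chosen for the example.

```python
import random

def run_epsilon_greedy(true_rates, n_visitors, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit over variants with assumed conversion rates."""
    rng = random.Random(seed)
    n_arms = len(true_rates)
    shows = [0] * n_arms        # visitors who saw each variant
    conversions = [0] * n_arms  # visitors who converted on each variant

    for _ in range(n_visitors):
        if rng.random() < epsilon or 0 in shows:
            # Exploration: pick a variant at random
            # (also used until every variant has been shown at least once)
            arm = rng.randrange(n_arms)
        else:
            # Exploitation: pick the variant with the best observed conversion rate
            arm = max(range(n_arms), key=lambda i: conversions[i] / shows[i])
        shows[arm] += 1
        if rng.random() < true_rates[arm]:
            conversions[arm] += 1
    return shows, conversions

# Hypothetical true conversion rates for three variants
shows, conversions = run_epsilon_greedy([0.02, 0.04, 0.10], n_visitors=20000)
for i, (s, c) in enumerate(zip(shows, conversions)):
    print(f"variant {i}: shown {s} times, {c} conversions")
```

In a run like this, most visitors end up on the strongest variant while the weaker ones still receive a small share of traffic, which is the trade-off the method makes: more conversions during the test, less data about the options that lost.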

Multivariate testing (MVT)

Multivariate testing looks at multiple elements at once—for example, subject line, headline, and call-to-action. Rather than just comparing two or three versions of a message, it measures all the possible combinations to see which mix performs best.

The benefit is that you can see which overall version wins, and which individual elements matter most. The drawback is that MVT requires larger sample sizes and more time, since it has to test so many combinations before producing clear results.
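To see why the sample size grows so quickly, it can help to count the combinations. The sketch below enumerates every combination of a few hypothetical options per element; the copy in `elements` and the figure of 5,000 users per combination are assumptions for illustration, not recommendations.

```python
from itertools import product

# Hypothetical options for each element under test
elements = {
    "subject_line": ["Save 20% today", "Your offer is waiting"],
    "headline": ["Welcome back", "Pick up where you left off", "New arrivals"],
    "cta": ["Shop now", "See the deal"],
}

# Every possible mix of one option per element (a full-factorial design)
combinations = [dict(zip(elements, combo)) for combo in product(*elements.values())]

# Assumed audience needed per combination for a stable read
users_per_combination = 5000
total_users_needed = len(combinations) * users_per_combination

print(len(combinations), total_users_needed)  # prints: 12 60000
```

Adding a single extra option to any element multiplies the number of combinations, and the audience needed, accordingly.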

MAB and MVT vs A/B testing

When comparing MAB vs A/B testing, the key difference is adaptability: traditional A/B testing splits traffic evenly—50% to version A, 50% to version B—until the test concludes. This helps calculate results with high confidence, but it also means half your audience may be exposed to a weaker option for the entire duration of the test.

Both MAB and MVT were designed to address these limitations. MAB adapts as results come in, directing more traffic to stronger variations in real time. MVT, on the other hand, lets you test multiple elements at once to uncover not just which version wins, but why. Together, they give marketers more flexible ways to experiment, depending on whether speed or precision matters most.
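For reference, the "high confidence" readout from a fixed 50/50 A/B split is often computed with a two-proportion z-test. The sketch below is one common way to do that, assuming a pooled standard error and a two-sided test; the function name and the conversion counts are hypothetical.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed with the error function
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value

# Hypothetical results: 5,000 users per version
z, p_value = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

The even split is what keeps this calculation simple: both versions collect data at the same rate for the whole test, which is also why the weaker version keeps receiving half the traffic until the test ends.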

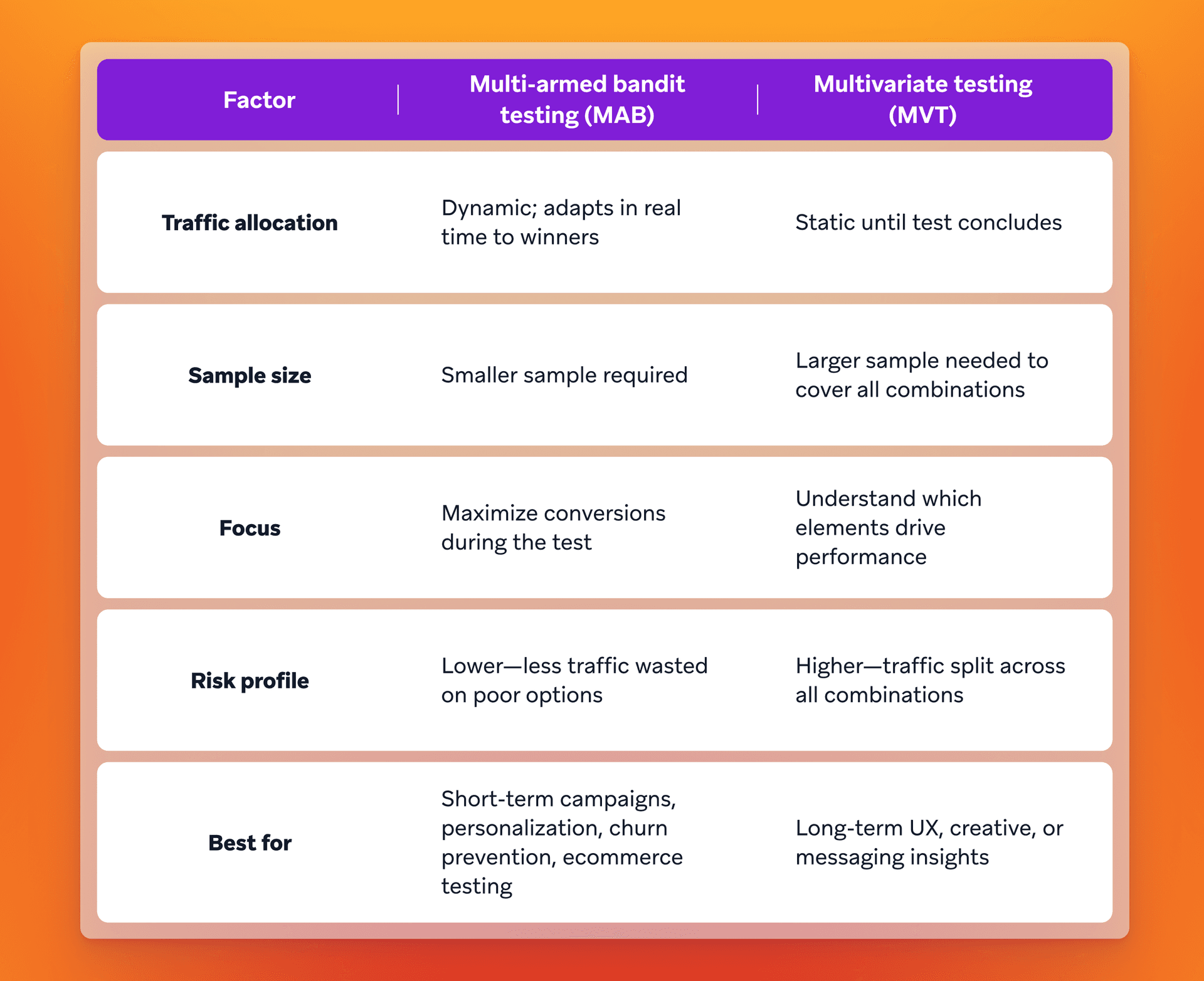

Key differences between MAB and MVT

The MAB vs MVT comparison comes down to how each method improves on traditional A/B testing. MAB prioritizes speed and conversions during the test, while MVT prioritizes depth of learning and precision. Marketers don’t have to see them as competitors; they’re tools designed for different situations.

Speed vs. precision

- MAB reacts in real time, reallocating traffic to the best-performing option. It’s built for agility, making it a strong choice for marketers focused on test velocity and campaigns where timing is critical.

- MVT takes longer to complete because it needs large sample sizes, but the trade-off is highly detailed insights into which elements matter most.

Risk vs. rigor

- MAB lowers risk by reducing wasted traffic on underperforming options, but sacrifices some statistical certainty.

- MVT provides rigorous results about every element tested, but at the cost of exposing more traffic to weaker variants for longer.

Use cases compared

- MAB is best for short-lived campaigns, time-sensitive offers, and churn prevention nudges—any situation where speed matters.

- MVT is best for design or messaging architecture, onboarding flows, and checkout optimization, where understanding the “why” behind performance is more important than instant results.

The marketer’s experimentation loop: Online experimentation methods

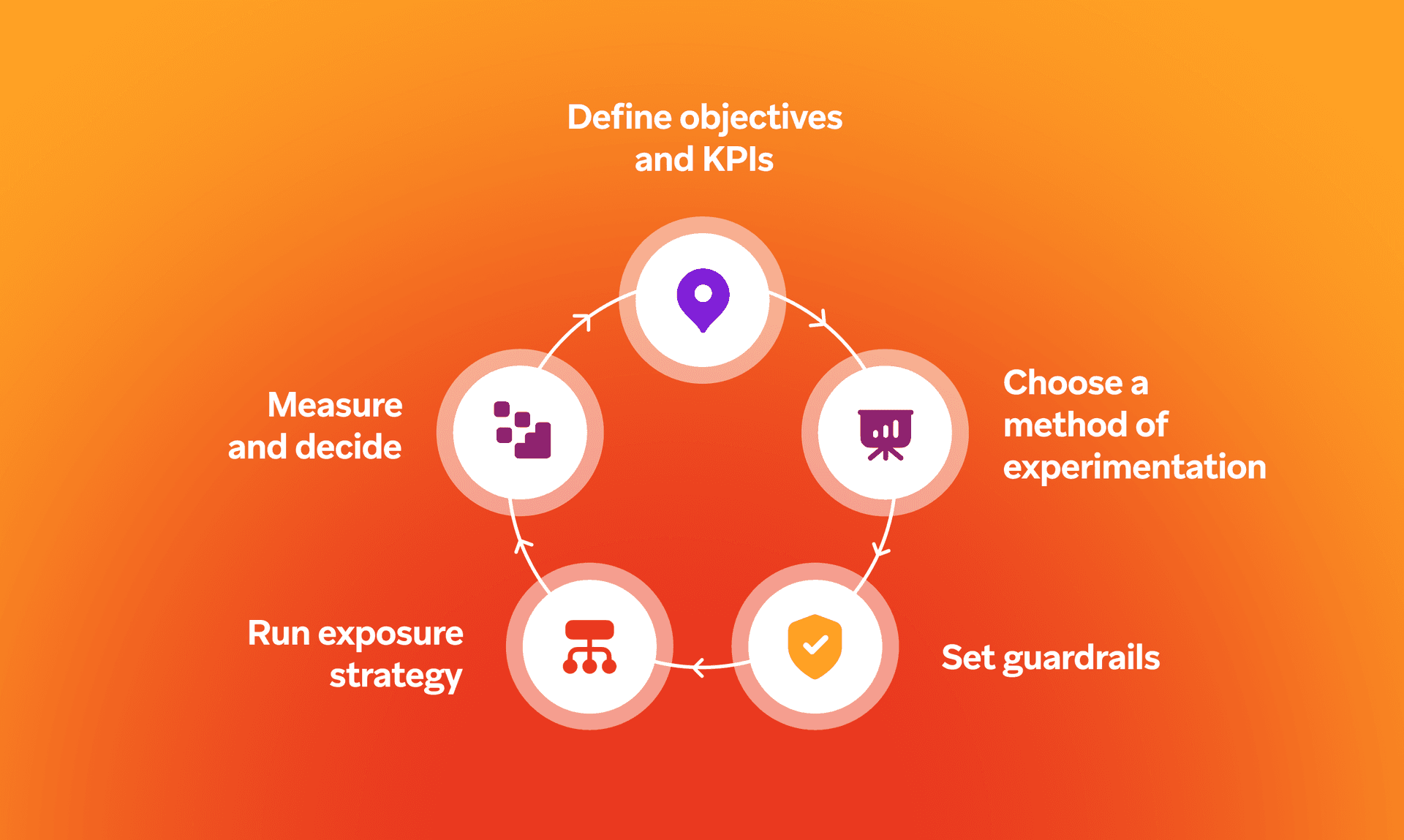

Whether you’re running MAB vs MVT experiments or other tests, results come faster when they follow a clear cycle. By approaching experiments as a loop—rather than one-off projects—marketers can move faster, limit wasted spend, and connect results directly to business goals.

Step 1: Define objectives and KPIs

Start by setting the outcome you want to influence. Whether it’s conversion rate (CVR), retention, or customer lifetime value (CLV), having a clear KPI helps shape both the method and the scale of the test.

Step 2: Choose a method

The right method depends on what matters most in the moment. Multi-armed bandit testing is designed to maximize performance during the test window, reallocating traffic to the stronger option as results come in. Multivariate testing is designed to reveal which elements—like a headline, layout, or offer—make the biggest impact.

Step 3: Set guardrails

Every experiment needs boundaries. Feature flags, frequency caps, and brand-safety checks keep tests controlled while protecting the customer experience.

Step 4: Run exposure strategy

How you roll out a test shapes both the speed and quality of learning. A bandit approach can start with a canary release and then expand as the algorithm gains confidence, making it a practical option for risk-controlled rollouts. A multivariate test typically requires larger, stable samples so each combination gets enough exposure to generate reliable insights.
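One way to picture a rollout that expands "as the algorithm gains confidence" is a ramp gated on the probability that the new variant beats the control. The sketch below estimates that probability by sampling from Beta posteriors and only moves to the next exposure step when it clears a threshold. The uniform Beta(1, 1) prior, the 95% threshold, the ramp steps, and both function names are assumptions for illustration, not a description of any product's behavior.

```python
import random

def probability_variant_beats_control(conv_c, n_c, conv_v, n_v, draws=10000, seed=0):
    """Monte Carlo estimate of P(variant rate > control rate) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        control = rng.betavariate(1 + conv_c, 1 + n_c - conv_c)
        variant = rng.betavariate(1 + conv_v, 1 + n_v - conv_v)
        wins += variant > control
    return wins / draws

def next_exposure(current_share, prob_better, threshold=0.95,
                  steps=(0.05, 0.25, 0.50, 1.0)):
    """Advance to the next ramp step only when confidence clears the threshold."""
    if prob_better < threshold:
        return current_share  # hold at the current exposure and keep collecting data
    for step in steps:
        if step > current_share:
            return step
    return current_share  # already fully rolled out

# Hypothetical canary results: 80/1000 conversions on control, 120/1000 on the variant
prob = probability_variant_beats_control(conv_c=80, n_c=1000, conv_v=120, n_v=1000)
print(prob, next_exposure(current_share=0.05, prob_better=prob))
```

Holding exposure when confidence is low is the guardrail here: a weak or unproven variant stays limited to the canary audience.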

Step 5: Measure and decide

Bandit tests can keep running indefinitely, constantly adapting to new data, while multivariate tests usually close with a definitive “winner.” Either way, the loop ends with a decision. Roll out what works, roll back what doesn’t, and feed the insights into the next cycle of conversion optimization.

Methods in practice

Looking at MAB vs MVT in practice shows how each method can be applied differently depending on the marketing goal. Here are some examples of how each approach could be used.

MAB testing examples: Dynamic allocation in action

Consider a 48-hour flash sale. There’s little value in waiting weeks for test results when the campaign ends in days. With multi-armed bandit testing, traffic would automatically shift toward the ad or creative performing best, while weaker options quickly get less exposure. This helps capture more revenue during the short window without needing manual changes.

Multivariate testing examples: Testing for deeper insight

Real-life multivariate testing examples often involve complex user journeys: Imagine a team refining an onboarding flow. They want to understand which mix of welcome email, in-app tip, and push notification works best to improve Day-7 retention. Multivariate testing could test all the combinations at once, highlighting not only the winning journey but also which individual elements made the biggest impact.
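To make "which individual elements made the biggest impact" concrete, the sketch below computes a simple main effect for each element: the average Day-7 retention across all combinations that used variant B of that element, minus the average across those that used variant A. The retention figures are invented for the example, and a real analysis would also check sample sizes and statistical significance.

```python
from statistics import mean

elements = ["welcome_email", "in_app_tip", "push_notification"]

# Hypothetical Day-7 retention per combination; each position is variant A or B
# of the element at the same position in `elements`
retention = {
    ("A", "A", "A"): 0.20,
    ("A", "A", "B"): 0.21,
    ("A", "B", "A"): 0.24,
    ("A", "B", "B"): 0.25,
    ("B", "A", "A"): 0.21,
    ("B", "A", "B"): 0.22,
    ("B", "B", "A"): 0.25,
    ("B", "B", "B"): 0.26,
}

def main_effect(position):
    """Average retention with variant B minus average with variant A for one element."""
    with_a = mean(r for combo, r in retention.items() if combo[position] == "A")
    with_b = mean(r for combo, r in retention.items() if combo[position] == "B")
    return with_b - with_a

effects = {name: main_effect(i) for i, name in enumerate(elements)}
best_combo = max(retention, key=retention.get)
most_influential = max(effects, key=effects.get)

print(best_combo, most_influential)
```

In this made-up data the in-app tip moves retention far more than the other two elements, which is the kind of element-level read a plain A/B comparison of two whole journeys would not give.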

Hybrid strategies

Sometimes it makes sense to combine the two methods. A marketer might use MVT to pinpoint the strongest subject line and hero image pair in an email campaign. Once that insight is clear, MAB could then dynamically allocate traffic toward those top performers, adapting over time as customer behavior changes.

Real-life marketing use cases with measurable outcomes

Experimentation isn’t just theory—it drives results across industries when applied to real campaigns. The following case studies show how leading brands use Braze to test, learn, and optimize customer journeys, turning data into measurable outcomes.

BlaBlaCar shifts abandoned carts back on track for 30% more bookings

BlaBlaCar, the community-based travel network with over 100 million members worldwide, wanted to recover customers dropping off mid-booking.

The problem

High-intent users were leaving before completing their trip reservations, creating a costly conversion gap.

The solution

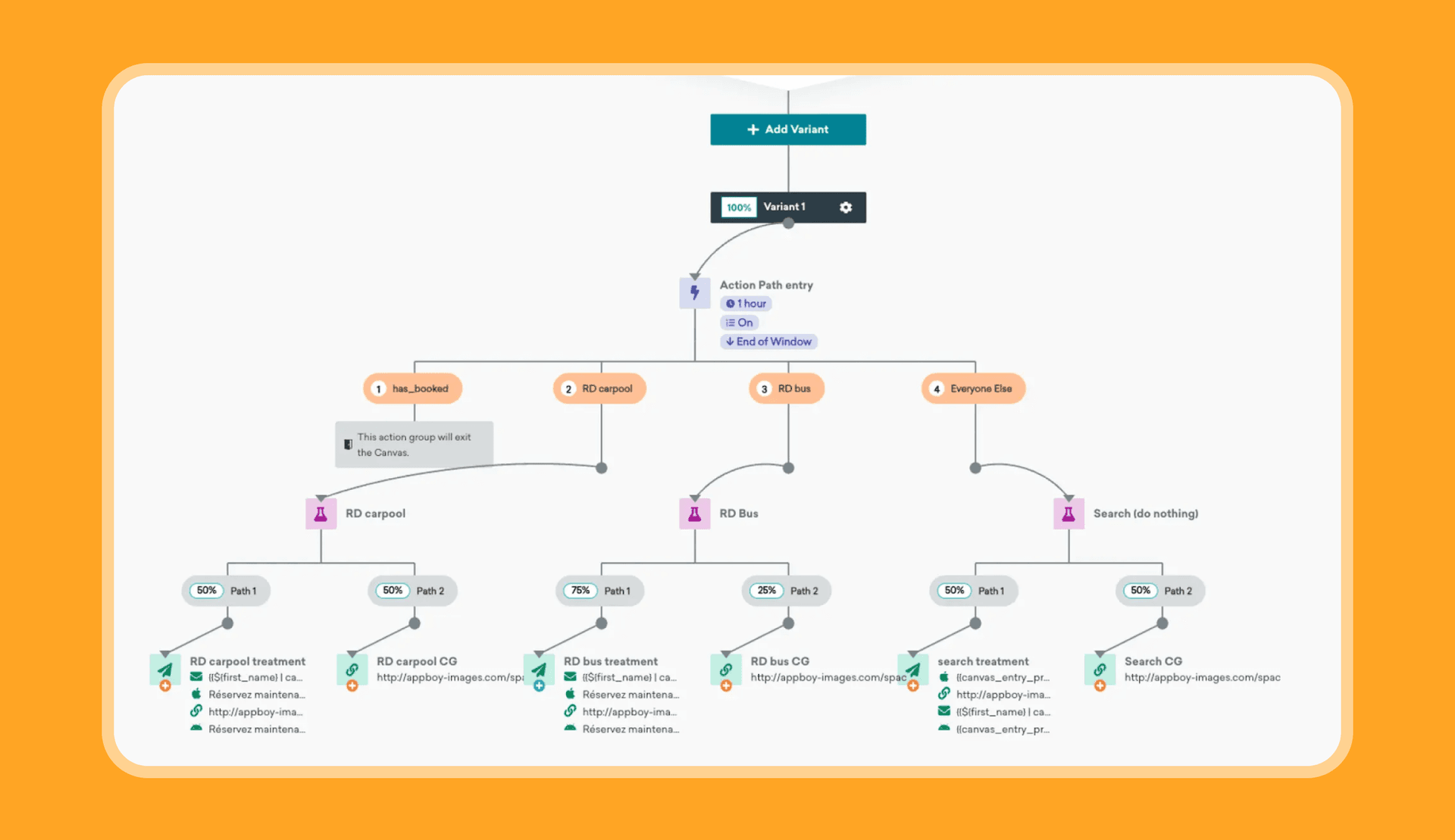

The team used Canvas to build a cross-channel abandoned cart program. With email, push, and Content Cards working together, plus personalization based on real-time behavior, they created timely nudges that guided users back to complete their booking—an approach that reflects the same principles of ecommerce testing, where small journey optimizations can deliver outsized conversion gains.

The results

The program delivered a 30% uplift in bookings and a 48% increase in click rates, showing the impact of personalized cart recovery.

KFC spices up onboarding for a 9.37X lift in lifetime revenue

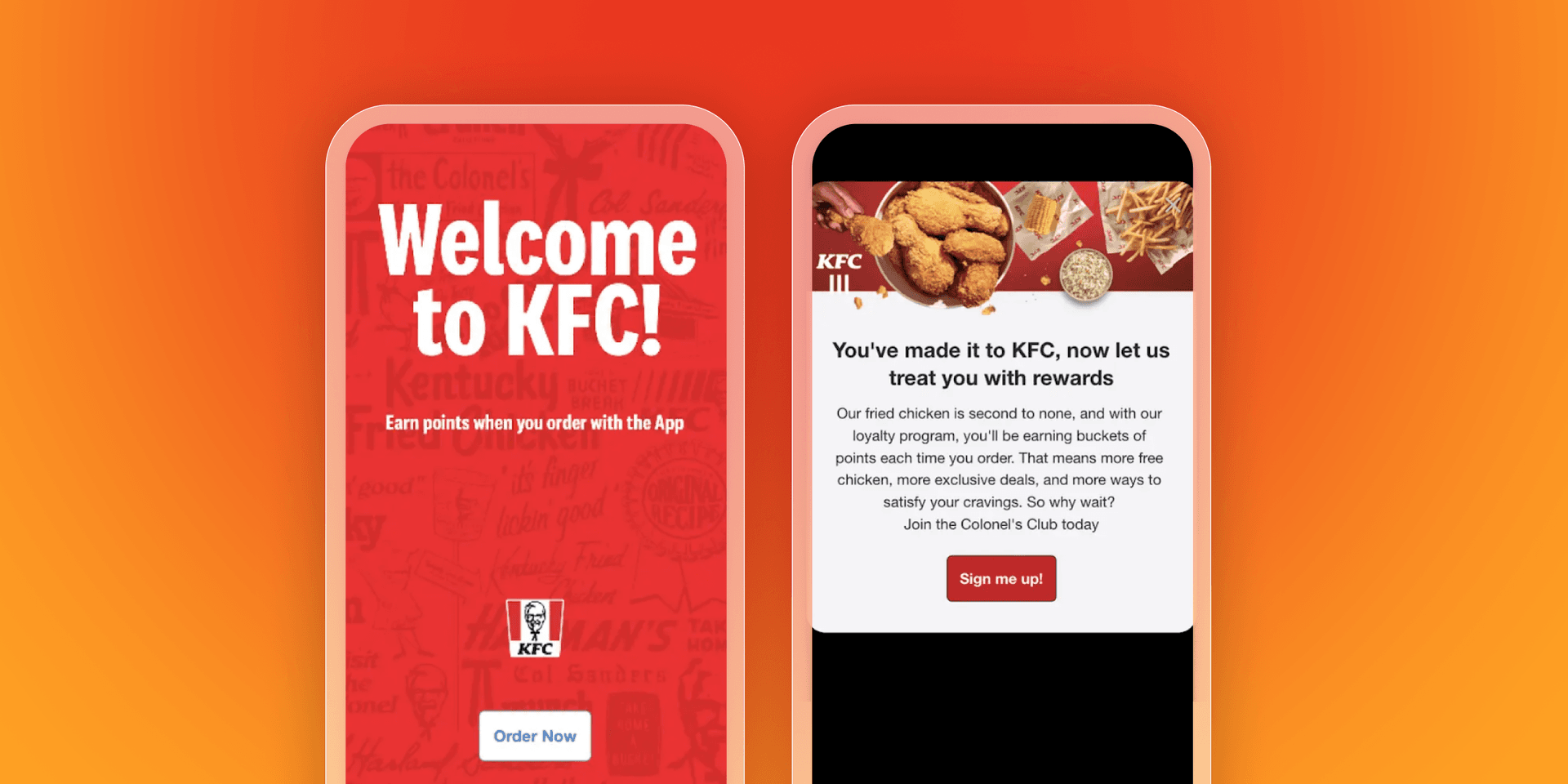

KFC Trinidad and Tobago, part of one of the world’s most recognizable quick-service brands, needed a way to better engage new app users and encourage repeat purchases. As a small team, they wanted automated campaigns that could scale impact without adding workload.

The problem

New customers weren’t always making it past the first few sessions, limiting their long-term value to the business. The team wanted a stronger onboarding journey to encourage faster repeat purchases and build loyalty.

The solution

Using Canvas, KFC Trinidad and Tobago created a mobile welcome series across email, in-app messages, and push. Personalized offers, timed nudges, and targeted promotions helped guide users from first login to first purchase and beyond. Automated experiment paths allowed the team to test messaging, while decision splits and action paths made it possible to adjust journeys based on real-time behavior.

The results

The new onboarding journey drove a 384% increase in session frequency and a 9.37X uplift in average lifetime revenue, with the welcome series accounting for 10% of daily CRM revenue. By tying onboarding to broader lifecycle campaigns, KFC Trinidad and Tobago turned early engagement into lasting value.

Clipchamp edits churn with a 600% winback boost

Clipchamp, the online video editing platform with more than 17 million users, wanted to re-engage lapsed customers and strengthen long-term loyalty.

The problem

The team faced challenges with disconnected tools, making it difficult to track engagement or deliver relevant, personalized outreach. As growth accelerated, preventing churn became a critical priority.

The solution

With Braze, Clipchamp created targeted winback campaigns for users who had let paid plans lapse. By using in-browser messaging and personalized offers, the team could test different incentives, from extended free trials to discounts. Content Cards added another channel to reach users who didn’t respond to email, ensuring messages were timely and visible.

The results

The personalized winback strategy achieved a 600% lift over the control group, proving the impact of targeting churn risk with the right offer at the right moment.

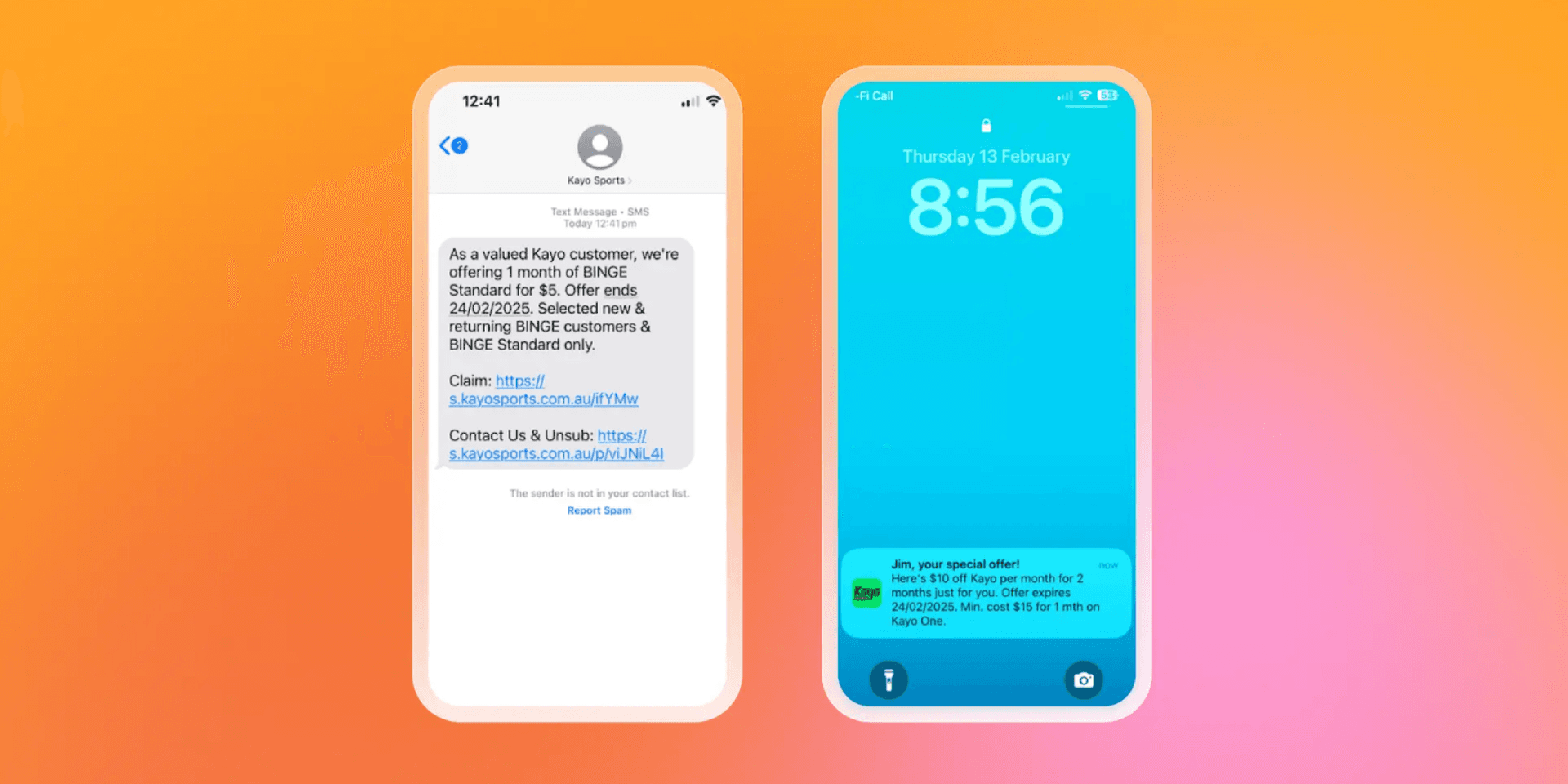

Kayo Sports scores big with AI-driven personalization, boosting subscriptions by 14%

Kayo Sports, Australia’s largest sports streaming service, wanted to move beyond generic campaigns to deliver experiences as personalized as the fans they serve. With a diverse audience watching across multiple devices, the challenge was creating tailored messaging that worked at scale.

The problem

Existing systems limited personalization and made it difficult to fully use customer data. As competition in streaming intensified, Kayo Sports needed a way to increase engagement, retention, and customer lifetime value.

The solution

By combining Braze with AI decisioning, Kayo Sports built its “Customer Cortex,” a personalization engine that determines the right message, creative, channel, and timing for each subscriber. Using Canvas to orchestrate journeys across email, push, SMS, and in-app messages, the brand scaled from hundreds of campaign variations to more than a million, all tailored to individual behavior and preferences.

The results

The new approach led to a 14% increase in subscriptions, an 8% lift in average annual occupancy, and a 105% increase in cross-sells—all achieved while raising average subscription prices by 20%. Personalized engagement proved central to building loyalty and long-term value.

How Braze enables smarter experimentation

For experimentation to have the strongest impact, it needs to be embedded into the tools marketers use every day. With Braze, marketers can put both MAB and MVT into practice inside the same ecosystem, turning experiments into always-on decisioning.

Journey orchestration

With Braze Canvas and marketing orchestration, brands can design and run multivariate tests across entire customer flows. This makes it possible to set holdouts, compare paths, and control variables across email, push, in-app, and SMS—building a clear picture of which combinations of messages and touchpoints drive the strongest results.

AI decisioning

AI decisioning brings reinforcement learning into the mix, powering adaptive, MAB-style optimization. With BrazeAI Decisioning Studio™, formerly known as OfferFit, AI agents go beyond a one-off bandit test: they continuously experiment at the individual level, deciding which channel, message, or offer to send in real time. This turns every campaign into a living experiment that learns as it goes.

Governance and observability

Experiments need to be both safe and measurable. Guardrails such as frequency capping and brand-safety checks keep campaigns under control, while dashboards provide visibility into metrics like CVR, retention, and CLV. The result is experimentation that not only adapts but does so responsibly.

Final thoughts on MAB vs MVT testing

Marketers face constant trade-offs between moving quickly and learning deeply. Multi-armed bandit testing and multivariate testing offer different ways to manage that balance—and together, they create space for both agility and rigor.

What matters most is weaving experimentation into everyday engagement, building a test-and-learn culture where campaigns keep improving not just at launch but over time. With Braze, that kind of continuous optimization and connected testing becomes possible across the customer journey.

MAB vs MVT testing FAQs

What is the difference between MAB and MVT?

The difference between MAB and MVT is in how they allocate traffic and generate insights. MAB reallocates traffic dynamically to the best-performing option during a test, while MVT tests multiple elements at once to show which combination works best.

When should marketers use MAB vs. MVT?

Marketers should use MAB when campaigns are time-sensitive, such as flash sales or churn-prevention nudges, because it adapts in real time. Marketers should use MVT when they need precise insight into which design, creative, or messaging elements have the biggest impact.

What are the pros and cons of MAB for marketing?

The pros and cons of MAB for marketing are clear. The main advantage is speed—MAB maximizes conversions while the test is still running. The drawback is less precision in understanding exactly why a variant performed better.

How does MVT help optimize campaigns?

MVT helps optimize campaigns by testing multiple elements at the same time. This allows marketers to see not just which version wins, but which individual components—such as layout, CTA, or creative—drive results.

Can MAB and MVT work together?

MAB and MVT can work together as complementary methods. Marketers can use MVT to identify strong elements and then apply MAB to dynamically allocate traffic toward those winning combinations in real time.

How do Braze and BrazeAI Decisioning Studio™ support both methods in practice?

Braze and BrazeAI Decisioning Studio™ support both methods in practice by embedding them into the customer engagement platform. Braze enables MVT through journey orchestration, while BrazeAI Decisioning Studio™ delivers MAB-style optimization with AI decisioning. Together, they make experimentation continuous and tied directly to KPIs like CVR, retention, and CLV.

Be Absolutely Engaging.™

Sign up for regular updates from Braze.

Related Content

Article13 min read

Article13 min readBraze vs Salesforce: Which customer engagement platform is right for your business?

February 19, 2026 Article18 min read

Article18 min readBraze vs Adobe: Which customer engagement platform is right for your brand?

February 19, 2026 Article7 min read

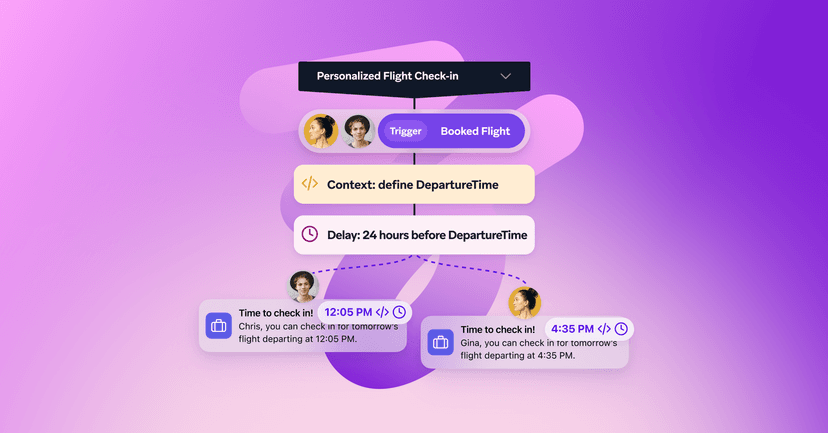

Article7 min readEvery journey needs the right (Canvas) Context

February 19, 2026